Navigating Mars with Rust: Helping the Rover See In Computer Vision

by Stephen Hedrick

In the first installment of the Rust Rover Navigation project, I walked through developing a pathfinding algorithm that would autonomously chart a course and dynamically re-route an optimal path to a goal in a complex and uncertain environment.

As AdaCore’s GNAT Pro for Rust Product Manager, I am excited to now apply the Rust pathfinding and re-routing components (which we tested in a virtual Rust/WASM simulation environment) to the actual rover robot. Our algorithm relies heavily on the rover’s onboard sensors to detect obstacles so it can adapt and plan accordingly. In this update, I’ll explore how important sight is to the rover and how to implement a vision solution that works with the pathfinding program we’re bringing with us from the first phase of the project.

Taking Stock

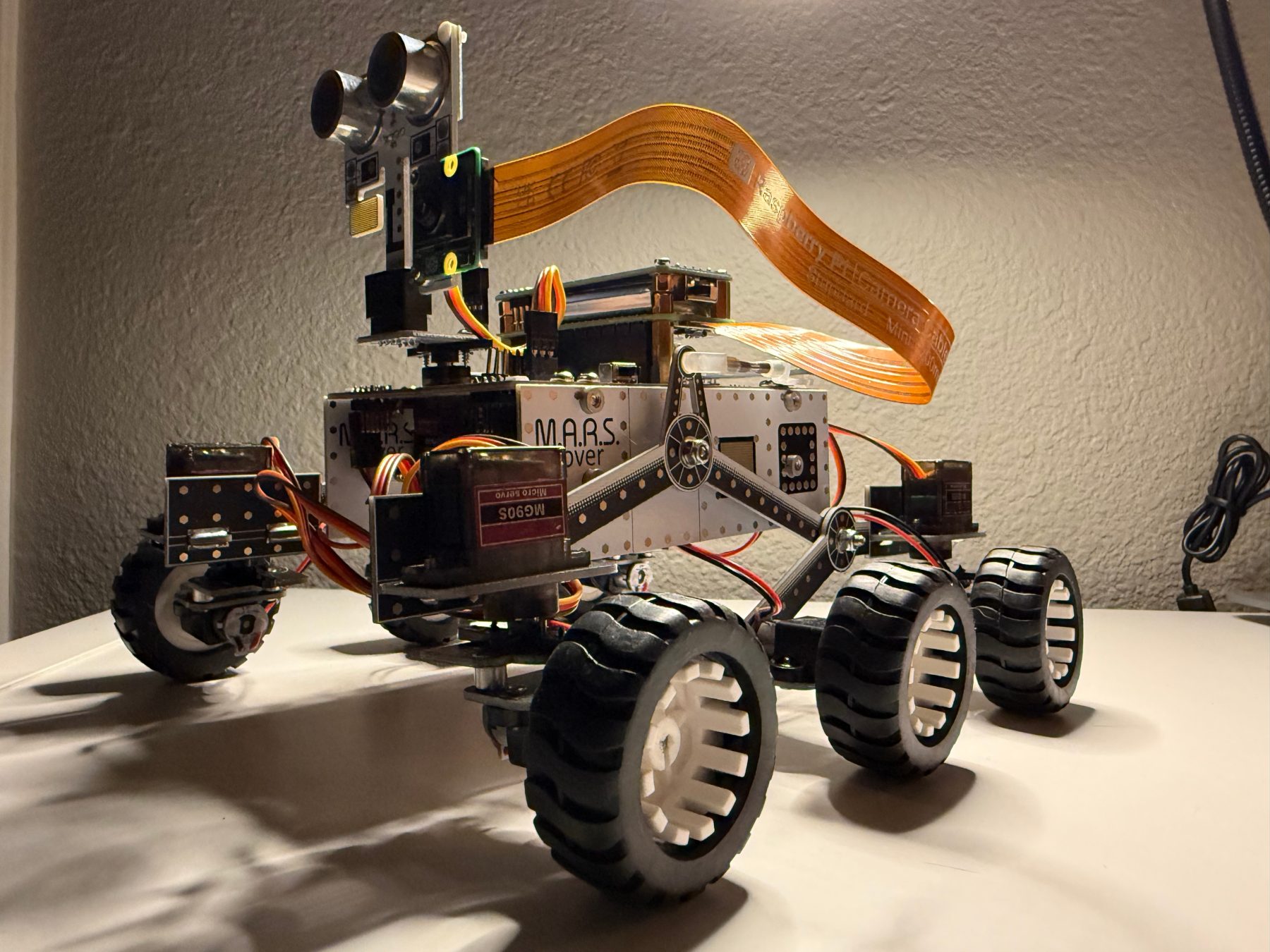

The transition from virtual to physical began optimistically enough. My colleagues at AdaCore had already shown us how they had built an Ada Mars Rover and how Rust could work well with SPARK while it controlled the 4tronix Mars Rover’s drivers in a simulated environment. But now the time had come to bring the Rust Rover from simulation to the real world.

The 4tronix Mars Rover kit comes well equipped for an educational robotics platform. Six independently controlled motors provide differential steering, the chassis and body accommodate various circuitry and GPIO pins, the mast carries an ultrasonic sensor and is affixed to the body by a rotational motor, and the wheels lock in with rubber tires for traction. But translating our D* Lite pathfinding algorithm from simulation to the real world revealed a fundamental gap: environmental perception.

The kit's ultrasonic sensor works exactly as advertised, providing approximate distance measurements to obstacles directly ahead in a cone-shaped field of view. However, our rover will be navigating complex scenarios that require a detected object’s precise distance, size, edge proximity, and potential trajectory and heading, all of which lie beyond the scope of an ultrasonic sensor. Our rover needs to distinguish between a wall and a doorway, identify whether an obstacle is moving, movable, or fixed, and recognize navigation markers like tape lines or gradients. A single distance measurement couldn't provide any of this.

Applying Theory

The solution seemed obvious: add a camera, implement computer vision, and feed detected obstacles and their properties into our validated D* Lite algorithm and web interface. What followed was a journey through the particular challenges of embedded computer vision, the surprising limitations of popular ML frameworks, and, ultimately, the creation of something that was theoretically possible but for which I could find no existing example in my research: a hybrid OpenCV embedded solution running in Rust on a Pi Zero 2W.

The SBC

The 4tronix rover ships in two flavors: one with a BBC Micro:bit, another with a Raspberry Pi Zero. Having wrestled with Rust in the Raspberry Pi's Linux environment before, and knowing little about the BBC Micro:bit beyond its educational pedigree, I found the choice clear. But even the Pi Zero felt underpowered for real-time computer vision. A quick upgrade to the Zero 2W bought us quad-core processing at 1GHz with 512MB of RAM. Still modest, but, as I discuss below, just barely enough.

Even after upgrading the SBC, I was still a bit worried about how well it could handle real-time machine learning image processing alongside our Rust D* Lite pathfinding and re-routing algorithm and four-layer logic architecture.

A Note on Powering the Rover

Power rarely gets mentioned in robotics tutorials, but it's the difference between a demo and a system. The kit's 4x AA batteries might sustain basic motor control, but add computer vision, pathfinding, and a locally streaming access point with a web interface, and you're looking at maybe 15 minutes before your sophisticated autonomous rover becomes a fancy paperweight. The addition of a Waveshare UPS HAT with a 10,000mAh LiPo battery helped power our system, though the additional weight is certainly a factor we’ll need to consider in our later steering calculations.

Rust Computer Vision Decisions

Researching computer vision on resource-constrained systems revealed a stark reality: almost no one runs OpenCV with Rust on a Raspberry Pi, let alone a Pi Zero 2W. The few examples I found were Python-based, running on Pi 4s with 4GB of RAM, or using pre-compiled C++ binaries. The Rust ecosystem for embedded computer vision was essentially uncharted territory.

The initial research was disheartening. TensorFlow Lite demanded 300MB just to initialize. PyTorch wouldn't even install on ARM without custom compilation. The standard OpenCV-Python tutorials assumed you had gigabytes to spare, that you would pair OpenCV with a lightweight model such as MobileNet, or that you were using a pre-compiled Python build. The real challenge wasn't just memory; it was finding a solution that could guarantee deterministic timing while running alongside our D* Lite pathfinding algorithm, locally served web interface, and motor control systems.

After weeks of experimentation, I discovered that YOLOv8 models could be exported to ONNX format and run through Microsoft's ONNX Runtime, which had experimental Rust bindings. This was promising, but no one had documented running this combination on anything less powerful than a Pi 4. The path forward would require pioneering a new approach: creating custom OpenCV bindings for Rust that could work within our 512MB memory envelope while maintaining real-time performance.
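For reference, a YOLOv8 detection model exported to ONNX with the default 640×640 input and 80 COCO classes produces one output tensor of shape [1, 84, 8400]. It holds four box values (center x, center y, width, height) plus 80 class scores for each of 8400 candidate boxes. YOLOv8 has no separate objectness score. The sketch below decodes that layout into thresholded boxes. The names `RawDetection` and `decode_yolov8` are hypothetical, not taken from the project repo. Non-maximum suppression, which is still needed to merge overlapping boxes, is omitted.

```rust
/// One candidate box decoded from a YOLOv8 detection head (hypothetical type).
#[derive(Debug, Clone, PartialEq)]
pub struct RawDetection {
    pub class_id: usize,
    pub score: f32,
    /// Corner coordinates in input-image pixels: [x1, y1, x2, y2].
    pub bbox: [f32; 4],
}

/// Decode an output tensor laid out row-major as [4 + num_classes, num_anchors]
/// (batch dimension already stripped). Rows 0..4 hold cx, cy, w, h; the
/// remaining rows hold one score per class.
pub fn decode_yolov8(output: &[f32], num_classes: usize, conf_threshold: f32) -> Vec<RawDetection> {
    let rows = 4 + num_classes;
    assert_eq!(output.len() % rows, 0, "length must be a multiple of 4 + num_classes");
    let anchors = output.len() / rows;
    // Element (row, anchor) lives at row * anchors + anchor.
    let at = |row: usize, anchor: usize| output[row * anchors + anchor];

    let mut detections = Vec::new();
    for a in 0..anchors {
        // Highest-scoring class for this candidate box.
        let (class_id, score) = (0..num_classes)
            .map(|c| (c, at(4 + c, a)))
            .fold((0, f32::MIN), |best, cur| if cur.1 > best.1 { cur } else { best });
        if score < conf_threshold {
            continue;
        }
        let (cx, cy, w, h) = (at(0, a), at(1, a), at(2, a), at(3, a));
        detections.push(RawDetection {
            class_id,
            score,
            bbox: [cx - w / 2.0, cy - h / 2.0, cx + w / 2.0, cy + h / 2.0],
        });
    }
    detections
}
```

For the real model you would call `decode_yolov8(&output, 80, 0.5)` on the flattened 84 × 8400 tensor. The 0.5 threshold is only an example value.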

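The fundamental issue wasn't just Python's resource consumption; it was the inability to guarantee safety-critical behavior. Consider what happens when the GC (garbage collector) kicks in while the rover is approaching an obstacle: the stop decision is delayed by however long the pause lasts. What we needed instead looked like this: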

```rust
// The solution we needed: deterministic obstacle response
use std::time::{Duration, Instant};
use anyhow::{anyhow, Result};

impl VisionSystem {
    fn process_critical_frame(&mut self) -> Result<NavigationAction> {
        // This MUST complete in < 50ms, EVERY time
        let detection_start = Instant::now();
        let detections = self.run_inference()?;

        // Ownership frees memory deterministically; there is no GC to pause here
        let critical_object = detections
            .iter()
            // total_cmp orders f32 distances without truncating them to integers
            .min_by(|a, b| a.distance_estimate.total_cmp(&b.distance_estimate))
            .ok_or_else(|| anyhow!("No detections"))?;

        if detection_start.elapsed() > Duration::from_millis(50) {
            eprintln!("warning: frame exceeded its 50 ms budget");
        }

        // Exhaustive match: the compiler rejects any unhandled case
        match (critical_object.class_name.as_str(), critical_object.distance_estimate) {
            ("person", d) if d < 1.0 => Ok(NavigationAction::EmergencyStop),
            (_, d) if d < 0.5 => Ok(NavigationAction::EmergencyStop),
            _ => Ok(NavigationAction::Continue),
        }
    }
}
```

Our first prototype, built with Python, Tiny YOLOv3, and the COCO dataset, exhibited a form of confusion that would have been amusing if it weren't so frustrating. When the camera pointed at me, it confidently declared I was either an elephant or a chair, never a person, and that I was either 10m or 0.05m away. Every obstacle became a person with absolute certainty, except actual people. Most critically, it couldn't determine whether the rover's path was clear or blocked, rendering it useless for navigation.

The failure went deeper than misclassification. Memory consumption ballooned to over 200MB, the garbage collector introduced unpredictable pauses up to 100ms, and CPU usage hovering around 80% left nothing for pathfinding. On a platform where every byte and cycle matters, Python's overhead was untenable.

With Python, the obstacle-response logic above could take anywhere from 30ms to 300ms depending on GC timing. In Rust, it consistently took 45-50ms.
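Removing GC pauses does not by itself guarantee a 50 ms deadline, because inference time still depends on the model and the CPU. One way to make the budget explicit is to time each decision and fail safe when it runs late. The sketch below is a minimal version of that idea. It assumes a hypothetical `decide_within_budget` helper and uses the 50 ms budget from the comment above. It detects an overrun after the fact and cannot preempt a slow step.

```rust
use std::time::{Duration, Instant};

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum NavigationAction {
    Continue,
    SlowDown,
    Stop,
    EmergencyStop,
}

/// Hypothetical per-frame latency budget, matching the 50 ms target above.
pub const FRAME_BUDGET: Duration = Duration::from_millis(50);

/// Run one decision step and report how long it took. If the step overran
/// its budget, its answer is based on a stale frame, so return
/// EmergencyStop instead of trusting it.
pub fn decide_within_budget<F>(budget: Duration, step: F) -> (NavigationAction, Duration)
where
    F: FnOnce() -> NavigationAction,
{
    let start = Instant::now();
    let action = step();
    let elapsed = start.elapsed();
    if elapsed > budget {
        (NavigationAction::EmergencyStop, elapsed)
    } else {
        (action, elapsed)
    }
}
```

Whether a late frame should trigger a full emergency stop or only a slowdown is a policy choice. Stopping is the conservative default in this sketch.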

| Metric | Python + Tiny YOLOv3 | Rust + YOLOv8 / OpenCV / ONNX | Impact |
|---|---|---|---|
| Memory usage | 200-300MB | 45-55MB | Allows concurrent pathfinding |
| Inference time | 30-300ms (variable) | 45-50ms (consistent) | Predictable obstacle response |
| System crashes | ~50% over 1 hr | <1% over 24 hrs | Mission / journey reliability |
| Obstacle detection range | 2-3m (when working) | 5-8m (reliable) | Earlier hazard avoidance |
| Grid mapping accuracy | ±2 cells | ±0.5 cells | Precise navigation |
| Concurrent systems | 2 max | 5+ | Complete autonomy |

OpenCV for Rust on Constrained Hardware

There was no existing solution for running OpenCV with Rust on a Pi Zero 2W. The opencv-rust crate generates its bindings from source at build time, and the build consumed over 3GB. Our rover's SD card, already hosting the OS and our navigation stack, couldn't accommodate this, and the build repeatedly crashed during compilation. The solution required a novel approach: hybrid compilation with pre-built bindings.

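```rust
// Custom minimal OpenCV bindings - opencv-embedded/src/lib.rs
use std::ffi::{c_char, c_int, c_void, CString};
use std::marker::PhantomData;
use std::ptr::NonNull;

// OpenCV's dnn module is a C++ API and exports no C symbols. These
// declarations assume a small extern "C" shim (not shown), linked alongside
// these libraries, that wraps cv::dnn::readNetFromONNX, Net::setInput,
// Net::forward, and deletion of the Net.
#[link(name = "opencv_dnn")]
#[link(name = "opencv_imgproc")]
extern "C" {
    // Only bind what we absolutely need
    fn cv_dnn_readNetFromONNX(path: *const c_char) -> *mut c_void;
    fn cv_dnn_Net_setInput(net: *mut c_void, blob: *mut c_void) -> c_int;
    fn cv_dnn_Net_forward(net: *mut c_void) -> *mut c_void;
    fn cv_dnn_Net_delete(net: *mut c_void);
}

// Safe Rust wrapper maintaining memory safety
pub struct DnnNet {
    ptr: NonNull<c_void>,
    // Raw-pointer marker keeps DnnNet !Send and !Sync
    _phantom: PhantomData<*mut c_void>,
}

impl DnnNet {
    /// Hypothetical constructor for illustration: load an ONNX model,
    /// returning None if the path contains a NUL byte or loading fails.
    pub fn from_onnx(path: &str) -> Option<Self> {
        let c_path = CString::new(path).ok()?;
        // SAFETY: c_path is a valid NUL-terminated string for the whole call.
        let raw = unsafe { cv_dnn_readNetFromONNX(c_path.as_ptr()) };
        NonNull::new(raw).map(|ptr| DnnNet { ptr, _phantom: PhantomData })
    }
}

impl Drop for DnnNet {
    fn drop(&mut self) {
        // SAFETY: ptr came from cv_dnn_readNetFromONNX and is freed exactly once.
        unsafe {
            cv_dnn_Net_delete(self.ptr.as_ptr());
        }
    }
}
```

This approach lets us link against system-installed OpenCV libraries while keeping the unsafe calls confined to a thin wrapper at the FFI (foreign function interface) boundary. The binary size dropped from an estimated 100MB to under 15MB, and memory usage stabilized at 50MB including the loaded YOLOv8 model.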
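The ownership pattern behind `DnnNet` can be exercised without OpenCV installed. In this sketch, two hypothetical Rust `extern "C"` functions, `fake_net_create` and `fake_net_delete`, stand in for the native library. They hand out and free an opaque handle. The wrapper rejects a null handle at construction, and a counter confirms that `Drop` releases the handle exactly once.

```rust
use std::ffi::c_void;
use std::ptr::NonNull;
use std::sync::atomic::{AtomicUsize, Ordering};

// Number of handles currently allocated by the fake "native" library.
static LIVE_HANDLES: AtomicUsize = AtomicUsize::new(0);

// Hypothetical stand-ins for C functions exported by a native library.
extern "C" fn fake_net_create() -> *mut c_void {
    LIVE_HANDLES.fetch_add(1, Ordering::SeqCst);
    Box::into_raw(Box::new(0u64)) as *mut c_void
}

extern "C" fn fake_net_delete(net: *mut c_void) {
    // SAFETY: `net` came from `fake_net_create` and is deleted exactly once,
    // which the owning wrapper below guarantees.
    unsafe { drop(Box::from_raw(net as *mut u64)) };
    LIVE_HANDLES.fetch_sub(1, Ordering::SeqCst);
}

/// Owning wrapper: constructed only through `create`, which rejects null,
/// and released exactly once in `Drop`.
pub struct NetHandle {
    ptr: NonNull<c_void>,
}

impl NetHandle {
    pub fn create() -> Option<Self> {
        NonNull::new(fake_net_create()).map(|ptr| NetHandle { ptr })
    }
}

impl Drop for NetHandle {
    fn drop(&mut self) {
        fake_net_delete(self.ptr.as_ptr());
    }
}

pub fn live_handles() -> usize {
    LIVE_HANDLES.load(Ordering::SeqCst)
}
```

Because `NetHandle` has no `Clone` impl and its pointer field is private, safe code cannot produce a second owner of the same handle, so a double free cannot be written without `unsafe`.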

Distance Estimation and Grid Mapping

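The rover's navigation required translating visual detections into our pathfinding grid. Without depth sensors, we implemented monocular distance estimation based on object size assumptions, using a pinhole-camera model: distance = real object height × focal length (in pixels) ÷ bounding-box height (in pixels).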

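```rust
impl VisionSystem {
    /// Monocular distance estimate in meters from a bounding-box height in pixels.
    fn calculate_distance(&self, pixel_height: f32, class_name: &str) -> f32 {
        // Assumed real-world heights, in meters, for common classes
        const OBJECT_HEIGHTS: [(&str, f32); 6] = [
            ("person", 1.7), ("car", 1.5), ("truck", 3.0),
            ("bicycle", 1.0), ("chair", 0.8), ("bottle", 0.25),
        ];

        let real_height = OBJECT_HEIGHTS
            .iter()
            .find(|(name, _)| *name == class_name)
            .map(|(_, h)| *h)
            .unwrap_or(0.5); // default height for unlisted classes

        // Focal length in pixels (camera-specific; calibrate for your lens)
        let focal_length = 500.0;

        if pixel_height > 0.0 {
            // Pinhole model: the pixel units cancel, leaving meters
            (real_height * focal_length) / pixel_height
        } else {
            // Degenerate box: report 10 m ("far away")
            10.0
        }
    }
}
```

This transformation had to be accurate, because a miscalculation could place an obstacle in the wrong grid cell and cause the rover to plan a path directly into danger. Rust's type system can enforce unit correctness at compile time once meters and pixels are given distinct types (plain f32 values, as above, carry no units). That prevents the meter/pixel confusion that plagued our early Python prototypes.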
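A minimal sketch of the unit-typed version follows. It assumes hypothetical `Meters` and `Pixels` newtypes and a hypothetical grid convention: rows count cells forward from the rover and columns count cells to the right. The pinhole estimate gives depth along the camera's optical axis, so the lateral offset follows from similar triangles. As a worked example, a 1.7 m person whose box is 170 px tall, seen through a 500 px focal length, is 1.7 × 500 / 170 = 5.0 m away.

```rust
/// Distance in meters (hypothetical newtype).
#[derive(Debug, Clone, Copy, PartialEq, PartialOrd)]
pub struct Meters(pub f32);

/// Length in image pixels (hypothetical newtype).
#[derive(Debug, Clone, Copy, PartialEq, PartialOrd)]
pub struct Pixels(pub f32);

/// Pinhole estimate: depth = real_height * focal_length / box_height.
/// Pixel units cancel, so the result is in meters. Returns None for a zero
/// or negative box height instead of guessing a distance.
pub fn estimate_distance(real_height: Meters, focal_length: Pixels, box_height: Pixels) -> Option<Meters> {
    if box_height.0 <= 0.0 {
        return None;
    }
    Some(Meters(real_height.0 * focal_length.0 / box_height.0))
}

/// Map a detection to a grid cell relative to the rover at `origin`.
/// `offset_x` is the box center's horizontal offset from the image center
/// (positive = right of center).
pub fn to_grid_cell(
    depth: Meters,
    offset_x: Pixels,
    focal_length: Pixels,
    cell_size: Meters,
    origin: (i32, i32),
) -> (i32, i32) {
    // Similar triangles: lateral offset = depth * offset_x / focal_length
    let forward = depth.0;
    let right = depth.0 * offset_x.0 / focal_length.0;
    (
        origin.0 + (forward / cell_size.0).floor() as i32,
        origin.1 + (right / cell_size.0).floor() as i32,
    )
}
```

With these signatures, swapping arguments (for example, passing a `Meters` where a `Pixels` is expected) fails to compile. That is exactly the kind of meter/pixel mix-up that plain f32 parameters cannot catch.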

Safety Through Types

Where Rust truly shone was in enforcing safety-critical design patterns at compile time. The rover must never hit an obstacle, must slow for animals and people, and must stop and assess when it detects a change in elevation. These aren't guidelines; they're requirements, and the type system helps enforce them:

```rust
use serde::{Deserialize, Serialize};

// Variants are declared from least to most severe, so the derived Ord
// ranks EmergencyStop highest.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord, Serialize, Deserialize)]
pub enum NavigationAction {
    Continue,
    SlowDown,
    Stop,
    EmergencyStop,
}

impl VisionSystem {
    fn determine_action(&self, class_name: &str, distance: f32) -> NavigationAction {
        match (class_name, distance) {
            ("person", d) if d < 1.0 => NavigationAction::EmergencyStop,
            ("person", d) if d < 2.0 => NavigationAction::Stop,
            ("person", d) if d < 3.0 => NavigationAction::SlowDown,
            (_, d) if d < 1.5 => NavigationAction::Stop,
            (_, d) if d < 3.0 => NavigationAction::SlowDown,
            _ => NavigationAction::Continue,
        }
    }

    pub fn get_navigation_command(&self) -> NavigationAction {
        // Assumes a parking_lot::RwLock, whose read() returns the guard directly
        let detections = self.current_detections.read();

        // Return the most severe action among all detections (Continue if none).
        // The closest obstacle is not always the most dangerous one: a person
        // at 1.8 m requires Stop, while a box at 1.6 m only requires SlowDown.
        detections
            .iter()
            .map(|d| d.action)
            .max()
            .unwrap_or(NavigationAction::Continue)
    }
}
```

When handling multiple detections:

```rust
// Real-world scenario: multiple obstacles
impl VisionSystem {
    pub fn process_multiple_detections(&self, detections: &[Detection]) -> NavigationAction {
        // Safety-critical: always respond to the most dangerous obstacle,
        // using the severity order EmergencyStop > Stop > SlowDown > Continue
        detections
            .iter()
            .map(|d| self.determine_action(&d.class_name, d.distance_estimate))
            .max()
            .unwrap_or(NavigationAction::Continue)
    }
}
```

The compiler won't let you forget to handle a case. A match must be exhaustive, so every (class, distance) pair has to map to a navigation command, here through the final catch-all arm. This isn't a runtime check that might fail; it's a compile-time guarantee. Because class names are strings, however, a misspelled label would silently fall through to that catch-all. That is one reason to convert labels to an enum before matching.
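When the match is on string labels, the compiler cannot tell whether every obstacle type has been considered, because only the catch-all arm makes the match exhaustive. Converting model labels to an enum once, and then matching on the enum without a wildcard, turns "did we handle animals?" into a compile error. The sketch below uses hypothetical `ObstacleClass` variants and redefines `NavigationAction` so it is self-contained. Treating animals with the same thresholds as people is an assumption, based on the requirement to slow for both. The NaN fallback is also an assumption.

```rust
// Declaration order defines severity for the derived Ord.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
pub enum NavigationAction {
    Continue,
    SlowDown,
    Stop,
    EmergencyStop,
}

/// Hypothetical obstacle categories; model labels are mapped onto these once.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ObstacleClass {
    Person,
    Animal,
    Other,
}

impl ObstacleClass {
    pub fn from_label(label: &str) -> Self {
        match label {
            "person" => ObstacleClass::Person,
            "dog" | "cat" | "bird" | "horse" => ObstacleClass::Animal,
            _ => ObstacleClass::Other,
        }
    }
}

pub fn determine_action(class: ObstacleClass, distance_m: f32) -> NavigationAction {
    // A NaN estimate makes every `<` comparison false; stop instead of continuing.
    if distance_m.is_nan() {
        return NavigationAction::Stop;
    }
    // No wildcard arm: adding an ObstacleClass variant is a compile error here.
    match class {
        ObstacleClass::Person | ObstacleClass::Animal => {
            if distance_m < 1.0 {
                NavigationAction::EmergencyStop
            } else if distance_m < 2.0 {
                NavigationAction::Stop
            } else if distance_m < 3.0 {
                NavigationAction::SlowDown
            } else {
                NavigationAction::Continue
            }
        }
        ObstacleClass::Other => {
            if distance_m < 1.5 {
                NavigationAction::Stop
            } else if distance_m < 3.0 {
                NavigationAction::SlowDown
            } else {
                NavigationAction::Continue
            }
        }
    }
}

/// Most severe action across all detections; Continue when there are none.
pub fn most_severe(detections: &[(ObstacleClass, f32)]) -> NavigationAction {
    detections
        .iter()
        .map(|&(class, d)| determine_action(class, d))
        .max()
        .unwrap_or(NavigationAction::Continue)
}
```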

The Hybrid Architecture

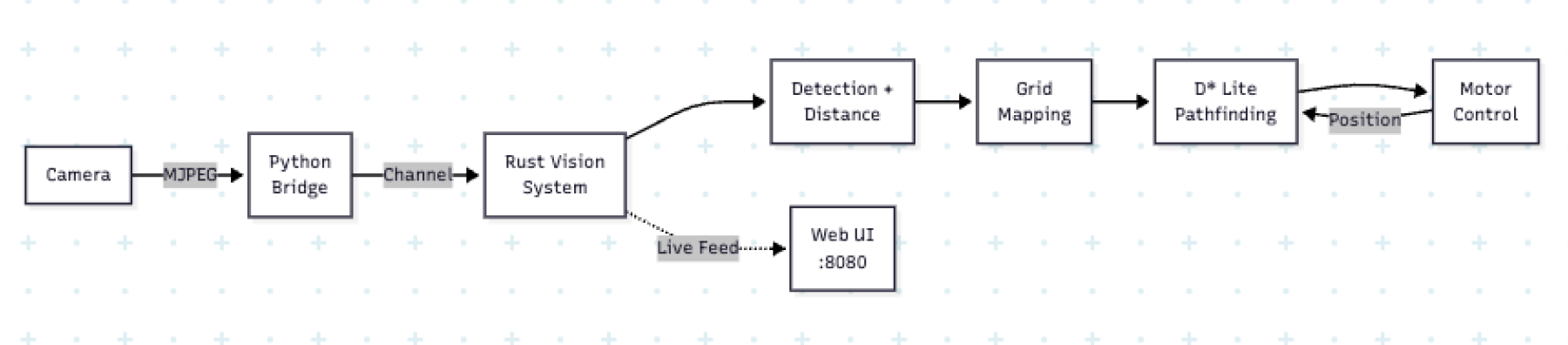

The final architecture represents pragmatic engineering. Rust handles 95% of the system, and Python manages the remaining 5% where hardware access requires it (here, the camera):

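```python
# Minimal Python bridge for video access
# Camera setup is an assumption: Picamera2 with its default preview configuration
from picamera2 import Picamera2

class CameraBridge:
    def __init__(self):
        self.camera = Picamera2()
        self.camera.configure(self.camera.create_preview_configuration())
        self.camera.start()

    def capture(self):
        # Returns raw bytes to Rust
        return self.camera.capture_array().tobytes()
```

```rust
// Rust processes everything else
use anyhow::Result;
use pyo3::prelude::*;

impl VisionSystem {
    async fn vision_loop(&mut self) -> Result<()> {
        // Create the bridge object once so the camera is not reopened every frame
        let bridge: Py<PyAny> = Python::with_gil(|py| -> PyResult<Py<PyAny>> {
            let module = py.import("camera_bridge")?;
            Ok(module.getattr("CameraBridge")?.call0()?.unbind())
        })?;

        loop {
            // Get raw frames from Python (GIL held only for the copy)
            let frame: Vec<u8> = Python::with_gil(|py| {
                bridge.call_method0(py, "capture")?.extract::<Vec<u8>>(py)
            })?;

            // All processing in Rust
            let detections = self.process_frame(&frame).await?;
            let commands = self.plan_path(detections)?;
            self.execute_commands(commands).await?;
        }
    }
}
```

This architecture delivers predictable performance. No garbage-collection pauses occur during critical navigation decisions, deterministic cleanup keeps memory use flat over hours of operation, and bounds-checked buffers prevent overflows from corrupting sensor data.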
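Because the bridge hands Rust an untyped byte buffer, the Rust side has to check that buffer against the expected frame shape before using it. The sketch below shows one minimal way to do that. It assumes a hypothetical `Frame` type and a caller-supplied width, height, and channel count. The real values depend on the camera configuration the bridge uses.

```rust
/// A camera frame received as raw bytes from the Python bridge (hypothetical type).
#[derive(Debug)]
pub struct Frame {
    pub width: usize,
    pub height: usize,
    pub channels: usize,
    data: Vec<u8>,
}

#[derive(Debug, PartialEq, Eq)]
pub enum FrameError {
    WrongLength { expected: usize, actual: usize },
}

impl Frame {
    /// Validate the byte count once, at the language boundary, so later
    /// pixel lookups cannot index past the end of the buffer.
    pub fn from_raw(data: Vec<u8>, width: usize, height: usize, channels: usize) -> Result<Self, FrameError> {
        let expected = width * height * channels;
        if data.len() != expected {
            return Err(FrameError::WrongLength { expected, actual: data.len() });
        }
        Ok(Frame { width, height, channels, data })
    }

    /// Channel values of the pixel at (x, y), or None if out of bounds.
    pub fn pixel(&self, x: usize, y: usize) -> Option<&[u8]> {
        if x >= self.width || y >= self.height {
            return None;
        }
        let start = (y * self.width + x) * self.channels;
        Some(&self.data[start..start + self.channels])
    }
}
```

A truncated or mis-sized capture is rejected with an error the vision loop can handle, instead of surfacing later as a panic or as a misaligned image.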

Lessons from the Edge

Building computer vision on severely constrained hardware taught valuable lessons about Rust's role in embedded AI and in safety-critical systems that rely on interoperability:

Memory predictability matters more than raw performance

The Python system was technically faster when it worked, but its garbage collection pauses made it unsuitable for real-time navigation. Rust's consistent 50ms processing time beat Python's 30ms average with 100ms spikes.

The Rust ecosystem for embedded vision is nascent but viable

We found that YOLOv8, ONNX Runtime, and custom OpenCV bindings can run together on a Pi Zero 2W, which opens possibilities for safety-critical vision applications on extremely constrained hardware. The key was abandoning the "compile everything from source" mentality and embracing hybrid approaches.

Compilation isn't always necessary

The breakthrough came from realizing we could link to existing libraries rather than compile them. This seems obvious in retrospect, but the Rust ecosystem's preference for source compilation obscured this option.

Type safety scales down

The same type system that makes Rust excellent for large systems proves invaluable on embedded platforms. When you can't attach a debugger and crashes mean mission failure, compile-time verification becomes critical.

Memory predictability enables complex systems on simple hardware

By eliminating garbage collection and using Rust's ownership model, we could run vision processing, pathfinding, motor control, and web streaming simultaneously on 512MB of RAM. By our estimate, the same system in Python would require at least 2GB and still couldn't guarantee real-time performance.
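One way to keep memory bounded while several tasks share the camera is a single-slot "latest frame" handoff. The capture side overwrites any frame the vision side has not consumed yet, so at most one frame waits in memory and the detector always works on the freshest image. The sketch below uses only the standard library. `LatestFrame` is a hypothetical name, not part of the project code.

```rust
use std::sync::{Condvar, Mutex};

/// Single-slot handoff holding at most one unconsumed frame.
pub struct LatestFrame {
    slot: Mutex<Option<Vec<u8>>>,
    ready: Condvar,
}

impl LatestFrame {
    pub fn new() -> Self {
        LatestFrame { slot: Mutex::new(None), ready: Condvar::new() }
    }

    /// Called by the capture side. Replaces (and frees) any frame the vision
    /// side has not taken yet; returns true if such a stale frame was dropped.
    pub fn publish(&self, frame: Vec<u8>) -> bool {
        let mut slot = self.slot.lock().unwrap();
        let dropped_stale = slot.replace(frame).is_some();
        self.ready.notify_one();
        dropped_stale
    }

    /// Called by the vision side. Blocks until a frame is available, then
    /// takes ownership of it and leaves the slot empty.
    pub fn take(&self) -> Vec<u8> {
        let mut slot = self.slot.lock().unwrap();
        loop {
            if let Some(frame) = slot.take() {
                return frame;
            }
            // wait() releases the lock while sleeping; the loop handles spurious wakeups
            slot = self.ready.wait(slot).unwrap();
        }
    }
}
```

Compared with an unbounded queue, this design trades completeness for bounded memory and freshness. When inference falls behind, old frames are discarded instead of piling up. For navigation, a stale frame has little value anyway.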

Why These Points Matter

The approach we took reaches beyond rovers. The same techniques apply to any safety-critical embedded vision system, such as autonomous vehicles navigating city streets, agricultural drones monitoring crops, or industrial robots working alongside humans. When failure isn't an option and resources are constrained, Rust's guarantees become essential.

The interoperability challenges we faced mirror those in safety-critical industries. Aerospace systems often need to integrate legacy C code with modern Rust safety guarantees. Automotive systems must bridge multiple languages while maintaining strict timing requirements. The rover in our project, operating with just 512MB of RAM, faced constraints similar to those of embedded medical devices, small operational drones, agricultural devices, and other systems that must process sensor data in real time, without failure, under environmental pressures.

What made this possible was Rust’s ability to draw a clear safety boundary at the FFI layer. While we couldn’t make the C++ OpenCV code safe, we could contain its unsafety in a small, auditable set of unsafe calls. Null handles, failed calls, and resource cleanup are then checked and handled at the Rust boundary instead of spreading through the system. Rust cannot detect memory corruption inside the C++ code itself, but it limits where such bugs can originate. This is the same approach used in safety-critical automotive systems, where Rust is gradually replacing C++ in ADAS (Advanced Driver-Assistance Systems) components.

The evolving project structure reflects the multi-language, safety-critical approach:

```text
~/ScoutNav/
├── hardware/                    # Physical rover control system
│   ├── src/
│   │   ├── main.rs              # Main control loop & system orchestration
│   │   ├── vision.rs            # Vision system
│   │   ├── web.rs               # Web server & WebSocket handler
│   │   ├── motor_control.rs     # GPIO motor control
│   │   ├── pathfinding.rs       # Navigation algorithms
│   │   └── vision_bridge.py     # Python bridge for frame capture
│   ├── static/
│   │   └── index.html           # Web interface with live feed display
│   ├── opencv-embedded/         # Custom OpenCV bindings for edge detection
│   └── target/release/rover     # Compiled binary
│
└── pathfinding/                 # D*-Lite grid-based navigation (previous project)
    ├── src/
    │   ├── components/          # Yew UI components
    │   ├── pathfinding/
    │   │   ├── dstar_lite.rs    # D*-Lite implementation
    │   │   └── pathfinder_trait.rs
    │   └── rover.rs             # Agent FSM
    └── index.html               # Pathfinding visualization
```

What’s To Come

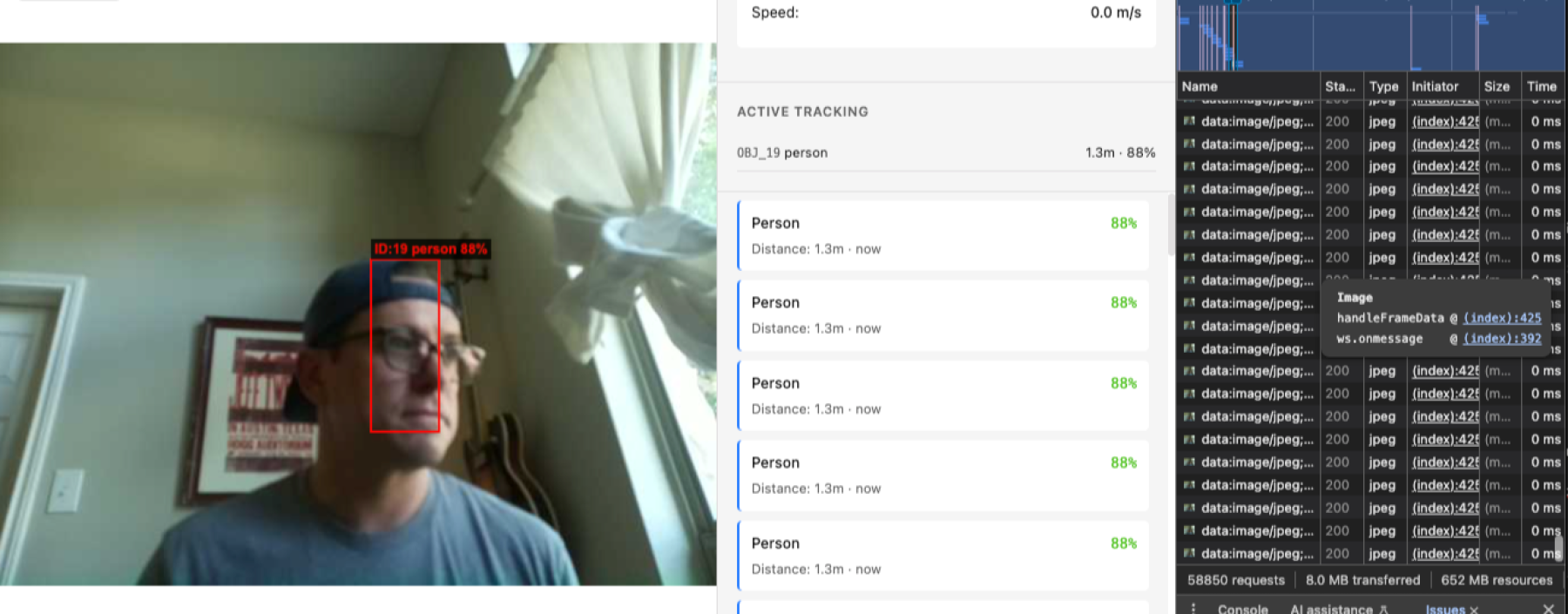

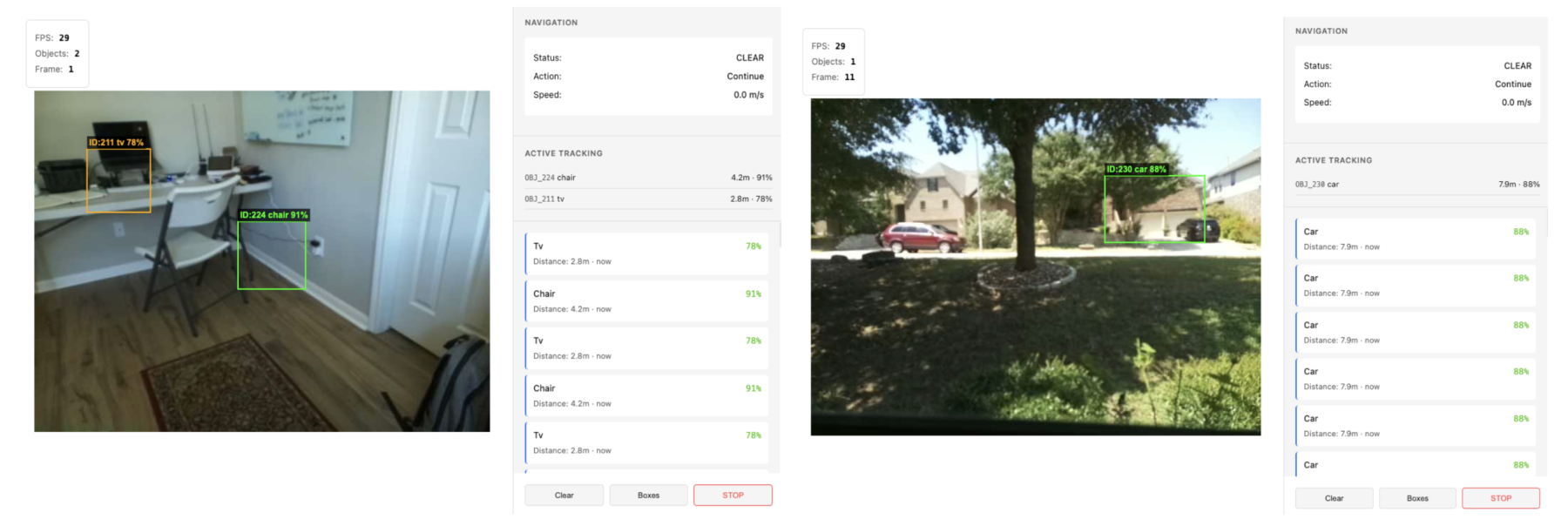

The vision system now reliably detects obstacles, classifies them by safety priority, and feeds structured data to our web interface, D* Lite pathfinding algorithm, and other system components. The camera streams video to the Pi Zero 2W for processing and orchestration. Frame rates stabilized at 13-15 FPS, sufficient for a rover moving at walking speed.
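The frame-rate claim can be checked with rough arithmetic. The inputs are a walking speed of about 1.4 m/s (an assumption, since the rover's actual speed is not stated), the lowest measured 13 FPS, and the roughly 50 ms inference time quoted earlier. In the worst case, an obstacle appears just after a capture, so the rover waits up to one frame period for the next capture and then one inference. That works out to 1.4 × (1/13 + 0.05) ≈ 0.18 m travelled before a decision is available, well inside the 0.5 m emergency-stop threshold in the first code example. The hypothetical helper below encodes that estimate. Motor braking distance is not included.

```rust
/// Worst-case distance (meters) travelled between an obstacle coming into
/// view and a decision being available: up to one full frame period before
/// the next capture, plus the inference latency for that frame.
/// Braking distance is not included.
pub fn reaction_distance_m(speed_mps: f32, fps: f32, inference_s: f32) -> f32 {
    let frame_period_s = 1.0 / fps;
    speed_mps * (frame_period_s + inference_s)
}
```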

But vision is only part of autonomous navigation. The next challenge: integrating vision, pathfinding, rover localization, and motor control into a cohesive system that can navigate real environments. The rover must not just see obstacles but respond to them, not just plan paths but execute them.

The code for this Computer Vision chapter of the Rust Rover project is available at this repo. As an incremental update, I’ll be putting together a progress video later this month, since I’ve already begun implementing exciting improvements and preparing for this year's final blog post in October.

If you’d like to see the results of this project in person, I’ll be showing off the Rust Rover in all of its pathfinding glory at AdaCore's Denver Tech Day on September 16th.

I look forward to seeing you all in Denver and getting into the next phase of the Rust Pathfinding Rover project.