Extending Priority Inheritance Beyond Protected Operations

by Pat Rogers –

In some programming languages, a “mutex” can be used to provide mutually exclusive access (hence the name) to some set of objects that are shared among multiple competing threads. All these threads follow the same usage pattern:

- the thread calls a routine on the mutex in order to block until it is appropriate to continue,

- upon return from the call that thread executes an arbitrary sequence of statements,

- after that sequence, the thread calls another operation on the same mutex to signal that some other thread can now be allowed to return from their call in step one.

A mutex is implemented so that, for any given mutex object, only one caller at a time is allowed to return from the call in step one. Therefore, step one is said to acquire or seize the mutex object, and step three releases it. The result is that only one thread at a time will execute the statements in step two, hence with mutually exclusive access to the manipulated resources.

In "priority-inheriting" mutexes, the mutex is assigned a priority and the caller threads' priorities are raised to that value as part of returning from step one, and remain there until the call in step three returns. As a consequence, the sequence of statements in step two executes at that inherited priority. That's appropriate because we want to minimize the execution time in which a lower priority task blocks a higher priority task; in that situation the priority system is subverted.

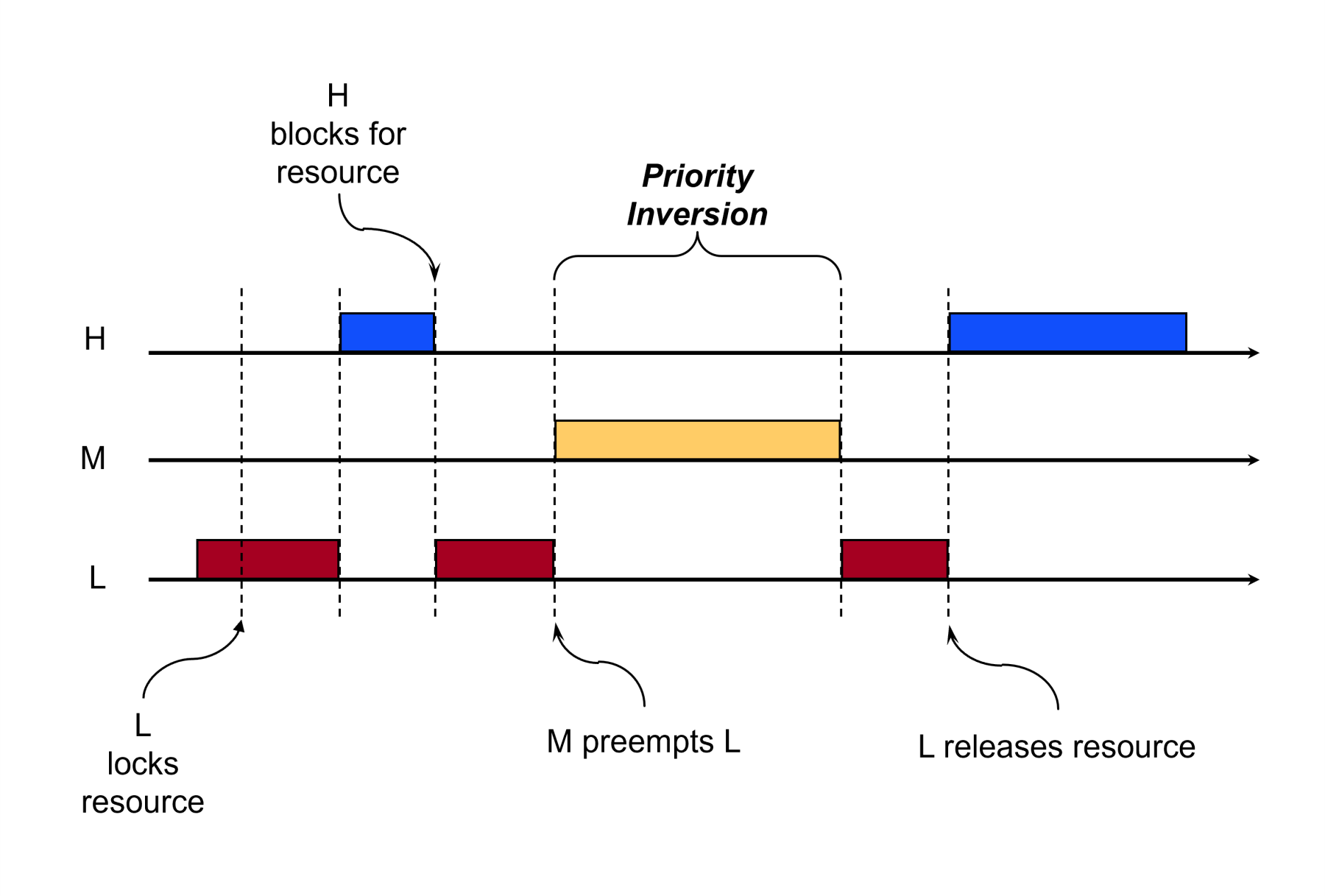

To see how it is subverted, consider the case in which there are three tasks: L, M, and H, i.e., tasks with low priority, medium priority, and high priority respectively. Let’s say that L currently holds a mutex that H also requires. H blocks because it awaits the mutex. L is running, but is then preempted by M, preventing L from executing and releasing the mutex that H requires. Task M is then indirectly blocking the high priority task H, a situation known as a “priority inversion” depicted below:

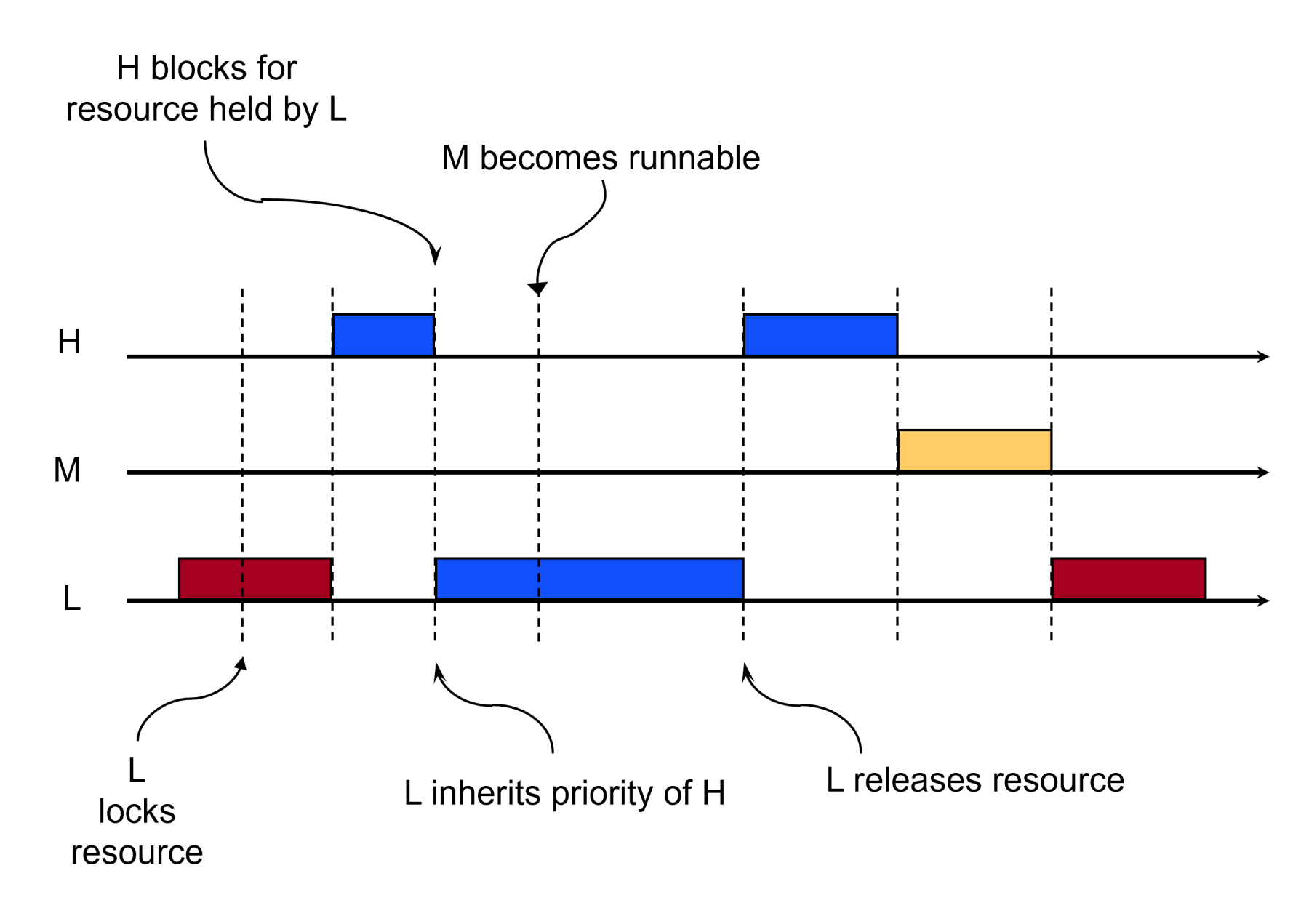

If, on the other hand, task L executes at the priority of H while holding the mutex shared with H, task M could not preempt L while L holds the mutex H requires. That’s the effect of basic priority inheritance: L inherits the priority of H while holding the mutex required by H, as shown below:

The point at which L's priority becomes that of H depends on the specific inheritance protocol in place. We can ignore that for this discussion.

Ada's protected objects have priority inheritance semantics. A protected object (PO) can have a priority assigned, and any calls to the associated protected operations will execute those operations at the PO priority. In effect caller tasks inherit the PO priority. Additionally, this priority inheritance works across calls from one PO to another.

However, there is a significant difference. Although the call to a protected operation executes at the priority of the enclosing PO, the caller's priority reverts to what it was at the point of the call when the call returns. As a result, if we implement a "priority-inheriting" mutex type as a protected type, the sequence of statements in step two above will not execute at the PO priority applied to the call in step one. Statements occurring after the call in step one are not part of that protected operation so they execute at the reverted priority.

Ada is an extremely expressive language for concurrent programming,

so of course there is a way to define a protected type that does provide

this sort of priority extension. We'll show how to do that in this

blog. Note that the approach is not specific to mutex abstractions. We

use them to illustrate the approach because they are simple and

well-known.

As you will see, there are two ways to apply protected objects to

achieve mutually exclusive access, reflecting the fact that there are,

generally speaking, two general idioms for applying protected types in

Ada.

First Idiom for Applying Protected Objects

In the first idiom, the PO implements all the application-specific

functionality for the shared resources under consideration. We declare

the shared data in the PO private part and declare the protected entries

and protected procedures that manipulate that data in the visible part.

The compiler won't allow any direct references to the hidden data from

outside of the PO, so the visible operations must be called by client

tasks to manipulate the data. That means the statements in step two are

necessarily expressed by the protected operations themselves. Readers

familiar with the classic "monitor" construct will recognize it as the

conceptual foundation for protected objects used this way.

For example, let's say we want to protect a product serial number

variable from concurrent manipulation by multiple caller tasks. These

tasks need to get the next sequential serial number, which entails

incrementing the current value each time a task requests the next

number. We must prevent the increment from occurring concurrently,

otherwise the resulting race condition could occasionally provide

incorrect values to the callers. Therefore, the increment will be done

inside a protected procedure that provides the current value via

parameter and also increments the value before returning. We could

declare the protected object like so:

protected Serial_Number is

procedure Get_Next (Number : out Positive);

private

Value : Positive := 1;

end Serial_Number;

protected body Serial_Number is

procedure Get_Next (Number : out Positive) is

begin

Number := Value;

Value := Value + 1;

end Get_Next;

end Serial_Number;Whenever any task calls Serial_Number.Get_Next, the task will block until it has mutually exclusive access to the PO, and consequently to the Serial_Number.Value variable. At that point, Value is assigned to the formal parameter and then incremented. Once the procedure returns, the caller task can continue execution with their unique serial number copy. No race conditions are possible and the shared serial number value increments safely each time Get_Next is called.

However, we cannot always take this first approach, even though it is archetypal. We cannot do so, at least not portably, if the statements of a protected operation include a "potentially blocking operation." This is a term defined by the language for those constructs that are not allowed within protected operations, neither directly nor indirectly, because they may cause the caller task to yield the processor to another task. To understand why that can be a problem you need to understand the underlying approaches available for implementing the mutually exclusive access that protected operations provide.

Underlying Implementation Approaches for PO Mutual Exclusion

One implementation approach, typical when executing on an operating system, is to use an explicit locking mechanism provided by the OS. The run-time library code implementing the protected operations first acquires a dedicated OS lock and then later releases it when exiting.

But another approach is available (on uniprocessors), that does not use explicit locks. Instead, mutual exclusion is implemented via priorities, both task priorities and PO priorities.

Specifically, developers assign a priority to each protected object. Each PO priority must be the highest (the "ceiling") of all the priorities of those tasks that actually call the operations provided by the PO. Consequently, for any given PO, no task that calls that PO will have a higher priority than the PO priority. Because caller tasks inherit the PO priority (immediately), their calls execute with the highest priority of any caller task for that specific PO. Therefore, no other caller task can preempt any current caller executing within the PO. The current caller may be preempted, but not by a task that would also call that same PO. Thus, mutually exclusive access is provided automatically, and very efficiently. (This approach has other benefits as well that are not pertinent here.)

However, the priority-based implementation cannot work reliably if blocking is allowed within a PO operation. If the current caller could block inside a protected operation, some other task could then be allowed to continue execution, including possibly a task making a call to that same PO. In that case mutual exclusion would not be provided for that PO.

As a result, the language defines a number of potentially blocking operations and disallows them within protected operations. Any I/O operation is potentially blocking, for example, as are delay statements, but there are others as well. See the Ada RM, section 9.5 {34} for the full list.

So we may find ourselves with some application code that must be executed with mutually exclusive access, but that cannot be written as a protected operation. The code must be written outside of a PO, not within a protected object's operations, and not in anything called by those protected operations. That means we cannot apply the first idiom.

We need another way to provide the mutually exclusive access required, which leads us to the other idiom for applying protected types in applications.

Second Idiom for Applying Protected Objects

In the second idiom, the protected object implements no application-specific functionality whatsoever. Instead, it implements some tasking synchronization protocol that we want to apply to application (caller) tasks executing outside the PO. That protocol might be mutual exclusion, but can be anything.

In this idiom, data may be declared in the private part, but they are not application data; they exist purely for the sake of implementing the intended protocol. Likewise, the protected operations do nothing application-specific.

Instead, these protected objects block caller tasks until the synchronization protocol allows them to return from the call, thereby allowing them to resume execution consistent with the protocol. Consequently, there will always be one or more operations that block callers, and, depending on the protocol, other operations to unblock them. (We are using the term "block" loosely here, meaning the caller task is not allowed to return from the call. This blocking is distinct from the potentially blocking operations prohibited by the language.)

For example, we could have a protected object that implements the Readers-Writers synchronization protocol. In this protocol only one task at a time can write (update) the state of the shared objects, and writers must wait until there are no readers, but multiple simultaneous readers are allowed as long as there is no writer active. Such a protected object would have multiple protected operations, some to block callers until appropriate for the given read or write action requested, and some to signal the end of the read or write operation so that a pending request (if any) can be granted.

Other sophisticated protocols are possible, such as atomic actions and fault tolerant "conversations" involving atomic actions among multiple tasks. The possibilities are endless.

Note that protected declarations in this idiom are usually protected types, rather than protected objects, because the facilities they provide are application-independent. The mutex facility is a good example: only the application developer knows how many sections of code will require mutually exclusive access, i.e., how many mutex objects will be required.

We can use this idiom to define a protected type providing a basic mutex. (We will address priorities momentarily.) The protected operations will consist of two routines: one to acquire the mutex (step one) and one to release it (step three). Calls to these two PO operations can then bracket an application-specific sequence of statements that manipulate objects requiring mutually exclusive access (step two). But now this bracketed code can include some potentially blocking operations, if need be.

protected type Mutex is

entry Acquire;

procedure Release;

private

Available : Boolean := True;

end Mutex;

protected body Mutex is

entry Acquire when Available is

begin

Available := False;

end Acquire;

procedure Release is

begin

Available := True;

end Release;

end Mutex;In the code above, the variable Mutex.Available is declared within the protected private part, but it exists only to implement the locking semantics. There are no local application objects, unlike, for example, the serial number example.

The following code snippet illustrates using the Mutex type for the sake of controlling access to a shared variable. In particular, this is code for a message logging package. It provides a visible procedure named Enter that writes to a hidden, but nonetheless shared output file. The output file is shared because multiple tasks can call Enter concurrently. Here is the pertinent part of the logging facility's package body:

Log_Lock : Mutex;

Log_File : File_Type;

procedure Enter (Log_Entry : String) is

begin

Log_Lock.Acquire;

Put_Line (Log_File, Log_Entry);

Log_Lock.Release;

end Enter;In the example above, the body of procedure Enter first calls Log_Lock.Acquire. The call is not allowed to return until the caller task exclusively holds the logical lock associated with the Log_Lock object. Therefore, every subsequent statement executes with mutual exclusion relative to the Log_Lock object. In this case, there's only one such statement, the one that writes the string to the single shared output file. That's a blocking operation, but we're not in a protected operation so that's not a problem. Finally, the procedure calls Log_Lock.Release to relinquish the current caller task's hold on the Log_Lock mutex. If some other task was waiting to hold the Log_Lock object, that task can now return from its call to Acquire and can execute its write to the log file.

There are issues unaddressed in the three-step client protocol illustrated by the code above, especially error cases. For example, if an exception is raised in step two, we need to ensure there is exactly one call to Release. There are other abstractions that address these client usage issues, but we want to focus on mutexes in this blog entry, particularly regarding priorities.

Priority Extending Mutex Type

Our simple Mutex type doesn't mention priorities because priorities themselves are optional. Concurrent programming does not require priorities. We will assume, however, that priorities are used by the application and supported by the underlying run-time library.

As we mentioned earlier, Ada standard priority semantics for protected objects includes priority inheritance: a call to a given protected operation executes at the priority of the PO, which will be greater than or equal to the priority of the task making the call.

But that inherited priority only applies during the corresponding protected operation. After the protected operation completes, the priority of the caller task will revert to the value it had at the point of the call.

When we are using a protected object in the first general idiom, that end-point for the priority inheritance is appropriate because the protected operations do all the application-specific manipulation of the shared data. But when we are applying protected objects in the second general idiom, the protected operations do not operate on any shared application variables. For the case of the Mutex type, after Acquire returns we know that no other task holds the lock so it is safe to execute the subsequent statements. However, those statements do not execute with the priority that Acquire had. That might or might not be an issue.

If it is an issue, we will want another kind of Mutex type, one that extends the caller's inherited priority to the sequence of statements following the call to Acquire. Only when Release is called should the caller's priority revert to the original value it had prior to the call to Acquire. Here's how you can express that:

Protocol_Error : exception;

-- Raised when client tasks do not follow the protocol required by the

-- given blocking abstraction. This is an exception shared by various

-- protected types in the same package.

protected type Priority_Extending_Mutex

(Ceiling : System.Priority)

with

Priority => Ceiling

is

entry Acquire;

-- Upon return, the caller's base priority is set to the value of

-- Ceiling and the caller is the "current owner" of the mutex.

procedure Release;

-- Upon return, the caller's base priority is set back to the value

-- it had at the point of the call to Acquire.

--

-- Raises Protocol_Error if the current owner is not the task

-- calling Release.

private

Available : Boolean := True;

Current_Owner : Task_Id := Null_Task_Id;

Owner_Base_Priority : System.Priority;

end Priority_Extending_Mutex;(The package declaration containing the protected type declaration will include a with-clause and use-clause for package Ada.Task_Identification, as well as a with-clause for package System.)

First, note that this new type, Priority_Extending_Mutex, has a discriminant of type System.Priority. Consequently, distinct objects of the type can have different priorities because different discriminant values can be specified by the application developer.

Next, note that there is an additional local variable, named Owner_Base_Priority. Now we have three protected variables, but like the earlier Mutex type, none of them are application-oriented.

protected body Priority_Extending_Mutex is

entry Acquire when Available is

begin

Available := False;

Current_Owner := Acquire'Caller;

Owner_Base_Priority := Get_Priority (Current_Owner);

Set_Priority (Ceiling, Current_Owner);

-- The caller's base priority will now retain, after the call to

-- Acquire returns, the elevated value it inherited (ie Ceiling).

-- This takes effect after the end of the protected action.

end Acquire;

procedure Release is

begin

if Current_Owner = Current_Task then

Set_Priority (Owner_Base_Priority, Current_Owner);

-- This takes effect after the end of the protected action.

Available := True;

Current_Owner := Null_Task_Id;

else

raise Protocol_Error;

end if;

end Release;

end Priority_Extending_Mutex;(The package body containing this protected body will have a with-clause and a use-clause for package Ada.Dynamic_Priorities.)

The bodies for Acquire and Release are similar to those of the simple type Mutex, except that they also control the caller’s priority. Procedure Acquire sets the caller's base priority to the PO ceiling priority value. As a result, the subsequent statements after the call to Acquire will execute at this ceiling priority. When procedure Release is called, as before it first checks that the caller is the current owner of the lock. If so, Release also sets the caller task's base priority back to the value it had when Acquire was called. (At least one caller’s base priority will necessarily be the same as the PO ceiling priority, in which case neither Acquire nor Release actually change it.)

This priority manipulation will also work when the sequence of statements bracketed by calls to Acquire and Release include calls to another mutex lock.

Another significant difference is that Release now raises an exception if the caller is not the task that currently holds the lock. We cannot declare exceptions within protected objects so the exception Protocol_Error is declared within the package containing the declaration of the protected type.

Here's a little demonstration, including nested calls:

with Ada.Text_IO; use Ada.Text_IO;

with Blocking; use Blocking;

with System; use System;

with Ada.Dynamic_Priorities; use Ada.Dynamic_Priorities;

procedure Main is

task type Caller (Id : Positive; Urgency : System.Priority) with

Priority => Urgency;

Lock20 : Priority_Extending_Mutex (Ceiling => 20); -- arbitrary

Lock30 : Priority_Extending_Mutex (Ceiling => 30); -- arbitrary, as long as different from that of Lock20

procedure Show_Caller_Priority (Id : Positive; State : String);

-- display the priority for the task identified by Id at the point

-- indicated by State

task body Caller is

begin

for K in 1 .. 5 loop -- arbitrary number of iterations

Show_Caller_Priority (Id, "before Lock20.Acquire");

Lock20.Acquire;

Show_Caller_Priority (Id, "after Lock20.Acquire");

Lock30.Acquire;

Show_Caller_Priority (Id, "after Lock30.Acquire");

Lock30.Release;

Show_Caller_Priority (Id, "after Lock30.Release");

Lock20.Release;

Show_Caller_Priority (Id, "after Lock20.Release");

delay 1.0; -- just to see them interleave, since their priorities are different

end loop;

end Caller;

C1 : Caller (Id => 1, Urgency => 5);

C2 : Caller (Id => 2, Urgency => 8);

procedure Show_Caller_Priority (Id : Positive; State : String) is

begin

Put_Line ("Caller" & Id'Image & " executing " & State & " at priority" & Get_Priority'Image);

end Show_Caller_Priority;

begin

null;

end Main;The main procedure declares two of our special mutex objects, one with a ceiling priority of 20 and one with a ceiling of 30. The two values are arbitrary, but the point is that they should be different in order to illustrate the inheritance across nested calls. In addition, the main declares a task type named Caller, with two discriminants: one for uniquely identifying objects of the type, and one for specifying the priorities of those task objects. This is the same approach as was used for the protected type’s ceiling priority. Objects C1 and C2 are tasks of this type, with distinct identifiers and distinct priorities. These priorities are also arbitrary, as long as they are distinct from the ceiling priorities used for the mutex objects, again for the sake of illustration. As the callers execute, we will see their priorities vary, depending on where they are relative to the calls to the two protected objects.

Although we’ve shown how to extend priority inheritance for a mutex abstraction, the technique should be possible for most protected objects that follow the second idiom for applying protected types.

Notes

- The Ravenscar and Jorvik protocols both require the ceiling priority protocol we’ve described above. See this blog entry for further details.

- Neither the Ravenscar profile nor the Jorvik profile allow use of package Ada.Dynamic_Priorities.

- We said that we cannot use potentially blocking operations inside protected operations if we want the code to be portable. This is a portability issue because such usage might work correctly, as intended. If you are running on an operating system and protected object mutual exclusion is implemented using OS-defined locks rather than priorities, a blocking operation won't break the implementation because the lock remains held. If there's no runtime check emitted by the compiler for a blocking operation, it will work. (The check for blocking operations is not required by the core language.) But such code is not portable. Note that both Ravenscar and Jorvik require the check.

- The priority-based approach to providing mutual exclusive access for protected objects only works on a uniprocessor.