A Building Code for Building Code

by Yannick Moy –

If you can't make sense of the title of this post, you may need to read the recent article about it in Communications of the ACM. In this article, Carl Landwehr, a renowned scientific expert on security, defends the view that the software engineering community is doing overall a poor job at securing our global information system:

To a disturbing extent, however, the kinds of underlying flaws exploited by attackers have not changed very much. [...] One of the most widespread vulnerabilities found recently, the so-called Heartbleed flaw in OpenSSL, was [...] failure to apply adequate bounds-checking to a memory buffer.

and that this is mostly avoidable by putting what we know works to work:

There has been substantial progress in the past 20 years in the techniques of static and dynamic analysis of software, both at the programming language level and at the level of binary analysis. [...] It would be feasible for a building code to require evidence that software for systems of particular concern (for example, for self-driving cars or SCADA systems) is free of the kinds of vulnerabilities that can be detected automatically in this fashion.

to the point that most vulnerabilities could be completely avoided by design if we cared enough:

Indeed, through judicious choice of programming languages and frameworks, many kinds of vulnerabilities can be eliminated entirely. Evidence that a specified set of languages and tools had indeed been used to produce the finished product would need to be evaluated.

Shocking! Or so it should appear. But the reality is that we are now used to not being able to rely on software in our everyday lives. We're more than 10 years past the "Trustworthy Computing" initiative by Microsoft to counter the effect caused by the viruses Code Red and Nimda in 2001. We've had Stuxnet and Heartbleed since then, so we know we're not safe yet.

But Carl Landwehr is not just shooting in the dark. This article in CACM is the latest step in a coordinated effort to raise the awareness of the software engineering community on these matters. It started with an essay in 2013 in which he describes in more details his point that today we're building cathedrals of sand. The following sentence neatly summarizes what we're advocating as developers of the Ada and SPARK programming environments:

At least use development practices that minimize, and, where possible, rule out these kinds of flaws. Use programming languages that make buffer overflows infeasible. Use static and dynamic analysis techniques on software for which source code is available to confirm the absence of whole classes of flaws. The software won’t be perfect but it will be measurably better.

Of course, web development and the development of critical embedded software is not alike. Different domains will require different sets of programming languages and techniques. This is why Carl Landwehr organized a workshop last year to develop a Building Code for medical device software. The results of which were published in this report. Of particular interest in relation to the choice of programming language and analysis techniques is section B of that report on "Elements intended to avoid / detect / remove specific types of vulnerabilities at the implementation stage":

- Use of Memory-Safe Languages

- Language Subsetting

- Automated Memory Safety Error Mitigation and Compiler-Enforced Buffer Overflow Elimination

- Use of Secure Coding Standards

- Automated Thread Safety Analysis

- Automated Analysis of Programs (Source/Binary) for Critical Properties

- Accredited Cryptographic Algorithms and Implementation

- Modified Condition Decision Coverage

- Operational Use Case Identification and Removal of Unused Functions

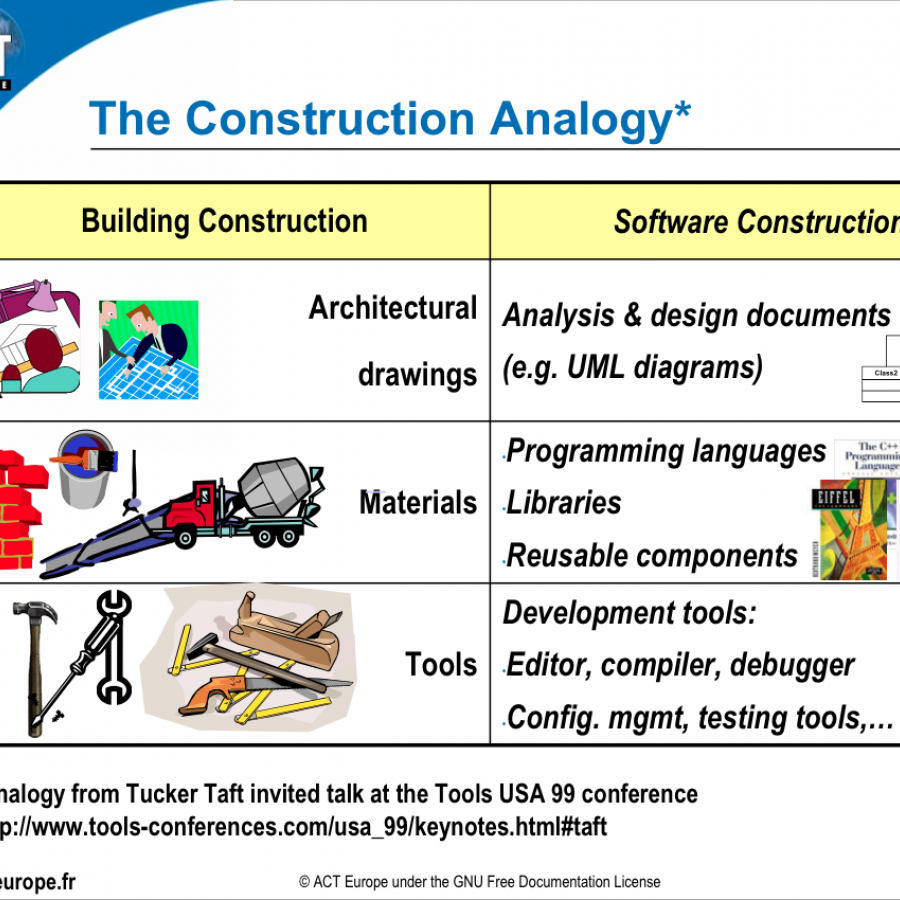

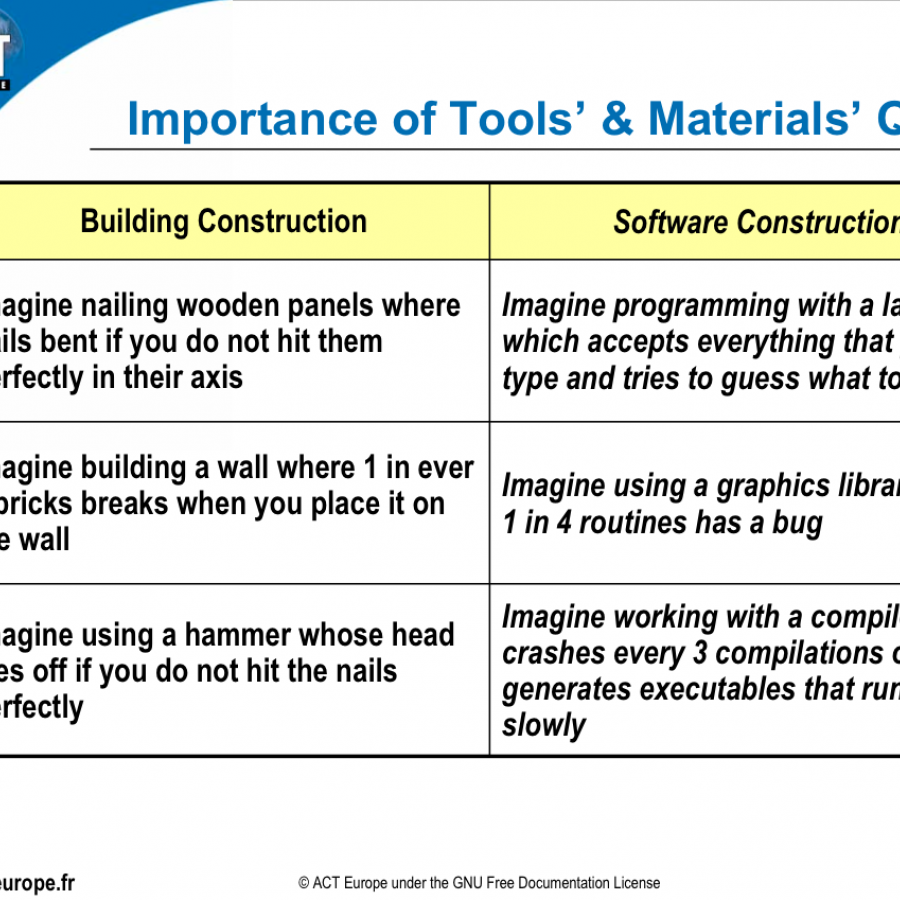

Ada and SPARK are ideally suited to address items 1 to 6, while items 7 to 9 are largely language independent (and thus apply equally well to Ada and SPARK as to other languages). This is no accident as these goals were explicit design goals for both languages. They do not need to be retrofitted after the fact in an ad-hoc and partial way. Funnily, the analogy between building construction and software construction on which Carl Landwehr 'builds' was already used in 2003 by Franco Gasperoni in slides for teaching Ada, and the footnote says it originally comes from a presentation from 1999 by Tucker Taft (Franco and Tuck are both members of AdaCore's executive team), just check these two slides:

The initial focus of Carl Landwehr on the medical device industry echoes the presentation from Professor Harold Thimbleby at the HIS conference last year, where he suggested the use of labels for software quality similar to the labels we have in Europe for energy consumption of home appliances. He even showed us a label he had made online, explaining that an interesting side effect of this regulatory constraint has been the need to update the initial scale (of A to G) with additional labels A+, A++ and A+++ to keep up with the advances in energy efficiency made by the industry. Indeed, who would like to rank B in a scale that starts at A+++? If I made my own B label for a drone collision avoidance software, would you be interested in buying it?