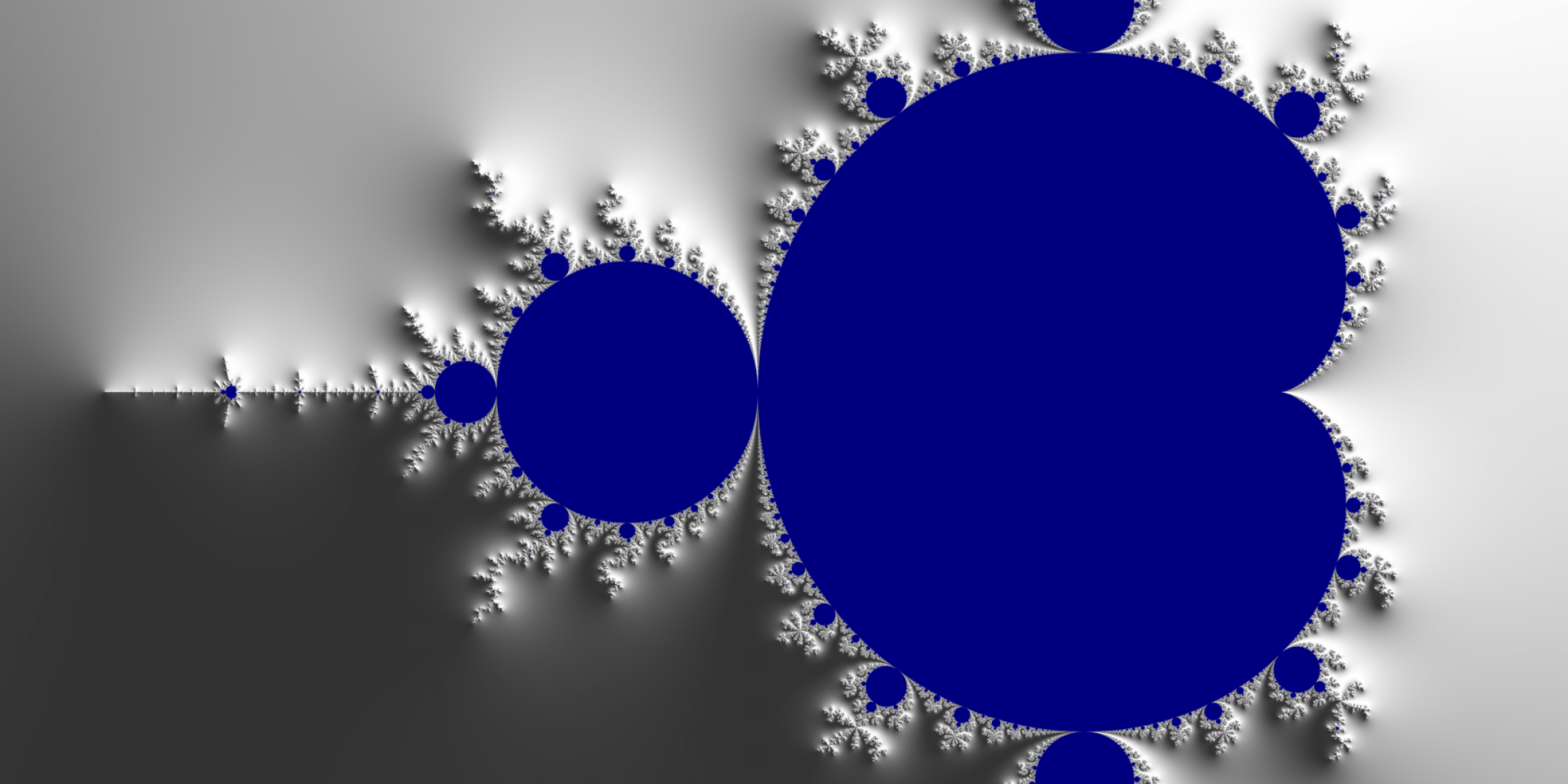

AdaFractal Part 2: Fixed Point and Floating Point Math Performance and Parallelization

by Rob Tice –

In Part 1 of this blog post I discussed why I chose to implement this application using the Ada Web Server to serve the computed fractal to a web browser. In this part I will discuss a bit more about the backend of the application, the Ada part.

Why do we care about performance?

The Ada backend will compute a new image each time one is requested from the front-end. The front-end immediately requests a new image right after it receives the last one it requested. Ideally, this will present to the user as an animation of a Mandelbrot fractal changing colors. If the update is too slow, the animation will look slow and terrible. So we want to minimize the compute time as much as possible. We have a few ways to do that: optimize the computation, and parallelize the computation.

Parallelizing the Fractal Computation

An interesting feature of the Mandelbrot calculation is that the computation of any pixel is independent of any other pixel. That means we can completely parallelize the computation of each pixel! So if our requested image is 1920x1280 pixels we can spawn 2457600 tasks right? Theoretically, yes we can do that. But it doesn’t necessarily speed up our application compared to, let’s say, 8 or 16 tasks, each of which computes a row or selection of rows. Either way, we know we need to create what’s called a task pool, or a group of tasks that can be queued up as needed to do some calculations. We will create our task pool by creating a task type, which will implement the actual activity that the task will complete, and we will use a Synchronous_Barrier to synchronize all of the tasks back together.

task type Chunk_Task_Type is

pragma Priority (0);

entry Go (Start_Row : Natural;

Stop_Row : Natural;

Buf : Stream_Element_Array_Access);

end Chunk_Task_Type;

type Chunk_Task is record

T : Chunk_Task_Type;

Start_Row : Natural;

Stop_Row : Natural;

end record;

type Chunk_Task_Pool is array (1 .. Task_Pool_Size) of Chunk_Task;

S_Task_Pool : Chunk_Task_Pool;

S_Sync_Obj : Synchronous_Barrier (Release_Threshold => Task_Pool_Size + 1);This snippet is from fractal.ads. S_Task_Pool will be our task pool and S_Sync_Obj will be our synchronization object. If you notice, each Chunk_Task_Type in the S_Task_Pool takes an access to a buffer in its Go entry procedure. In our implementation, each task will have an access to the same buffer. Isn’t this a race condition? Shouldn’t we use a protected object?

The downside of protected object

The answer to both of those questions, is yes. This is a race condition and we should be using a protected object. If you were to run CodePeer on this project, it identifies this as a definite problem. But using a protected object is going to destroy our performance. The reason for this is because under the hood, the protected object will be using locks each time we access data from the buffer. Each lock, unlock, and wait-on-lock call is going to make the animation of our fractal look worse and worse. However, there is a way to get around this race-condition issue. By design, we can guarantee that each task is going to access the buffer from Start_Row to Stop_Row. So, by design we can make sure that each task’s rows don’t overlap another task’s rows thereby avoiding a race condition.

Parallelization Implementation

Now that we understand the specification of the task pool, let’s look at the implementation.

task body Chunk_Task_Type

is

Start : Natural;

Stop : Natural;

Buffer : Stream_Element_Array_Access;

Notified : Boolean;

begin

loop

accept Go (Start_Row : Natural;

Stop_Row : Natural;

Buf : Stream_Element_Array_Access) do

Start := Start_Row;

Stop := Stop_Row;

Buffer := Buf;

end Go;

for I in Start .. Stop loop

Calculate_Row (Y => I,

Idx => Buffer'First +

Stream_Element_Offset ((I - 1) *

Get_Width * Pixel'Size / 8),

Buffer => Buffer);

end loop;

Wait_For_Release (The_Barrier => S_Sync_Obj,

Notified => Notified);

end loop;

end Chunk_Task_Type;The body of each Chunk_Task_Type waits for a Go signal from the main task. When it receives the go, it also receives an access to the buffer and the start and stop row to work on. It then calculates the pixels for the rows. When it finishes it calls the Wait_For_Release procedure of the Synchronous_Barrier object which blocks until all tasks have completed their work. Once all the tasks have checked in with the Synchronous_Barrier using the Wait_For_Release call, all tasks are released. The main task grabs the buffer with the new image and hands it back off to the AWS server to be sent out and the worker tasks go back to hanging on their entry calls.

The bigger the task pool…

Now we come back to the size of the task pool. Based on the implementation above we can chunk the processing up to the granularity of at least the number of rows being requested. So in the case of a 1920x1280 image, we could have 1280 tasks! But we have to ask ourselves, is that going to give us better performance than 8 or 16 tasks? The answer, unfortunately, is probably not. If we create 8 tasks, and we have an 8 core processor, we can assume that some of our tasks are going to execute on different cores in parallel. If we create 1280 tasks and we use the same 8 core processor, we don’t get much more parallelization than with 8 tasks. This is a place where tuning and best judgement will give you the best performance.

Fixed vs Floating Point

Now that we have the parallelization component, let’s think about optimizing the maths. In most fractal computations floating point complex numbers are used. Based on our knowledge of processors, we can assume that in most cases floating point calculations will be slower than integer calculations. So theoretically, using fixed point numbers might give us better performance. For more information on Ada fixed point types check out the Fixed-point types section of the Introduction to Ada course on learn.adacore.com .

The Generic Real Type

Because we are going to use the same algorithm for both floating and fixed point math, we can implement the algorithm using a generic type called Real. The Real type is defined in computation_type.ads.

generic

type Real is private;

with function "*" (Left, Right : Real) return Real is <>;

with function "/" (Left, Right : Real) return Real is <>;

with function To_Real (V : Integer) return Real is <>;

with function F_To_Real (V : Float) return Real is <>;

with function To_Integer (V : Real) return Integer is <>;

with function To_Float (V : Real) return Float is <>;

with function Image (V : Real) return String is <>;

with function "+" (Left, Right : Real) return Real is <>;

with function "-" (Left, Right : Real) return Real is <>;

with function ">" (Left, Right : Real) return Boolean is <>;

with function "<" (Left, Right : Real) return Boolean is <>;

with function "<=" (Left, Right : Real) return Boolean is <>;

with function ">=" (Left, Right : Real) return Boolean is <>;

package Computation_Type is

end Computation_Type;We can then create instances of the Julia_Set package using a floating point and fixed point version of the computation_type package.

type Real_Float is new Float;

function Integer_To_Float (V : Integer) return Real_Float is

(Real_Float (V));

function Float_To_Integer (V : Real_Float) return Integer is

(Natural (V));

function Float_To_Real_Float (V : Float) return Real_Float is

(Real_Float (V));

function Real_Float_To_Float (V : Real_Float) return Float is

(Float (V));

function Float_Image (V : Real_Float) return String is

(V'Img);

D_Small : constant := 2.0 ** (-21);

type Real_Fixed is delta D_Small range -100.0 .. 201.0 - D_Small;

function "*" (Left, Right : Real_Fixed) return Real_Fixed;

pragma Import (Intrinsic, "*");

function "/" (Left, Right : Real_Fixed) return Real_Fixed;

pragma Import (Intrinsic, "/");

function Integer_To_Fixed (V : Integer) return Real_Fixed is

(Real_Fixed (V));

function Float_To_Fixed (V : Float) return Real_Fixed is

(Real_Fixed (V));

function Fixed_To_Float (V : Real_Fixed) return Float is

(Float (V));

function Fixed_To_Integer (V : Real_Fixed) return Integer is

(Natural (V));

function Fixed_Image (V : Real_Fixed) return String is

(V'Img);

package Fixed_Computation is new Computation_Type (Real => Real_Fixed,

"*" => Router_Cb."*",

"/" => Router_Cb."/",

To_Real => Integer_To_Fixed,

F_To_Real => Float_To_Fixed,

To_Integer => Fixed_To_Integer,

To_Float => Fixed_To_Float,

Image => Fixed_Image);

package Fixed_Julia is new Julia_Set (CT => Fixed_Computation,

Escape_Threshold => 100.0);

package Fixed_Julia_Fractal is new Fractal (CT => Fixed_Computation,

Calculate_Pixel => Fixed_Julia.Calculate_Pixel,

Task_Pool_Size => Task_Pool_Size);

package Float_Computation is new Computation_Type (Real => Real_Float,

To_Real => Integer_To_Float,

F_To_Real => Float_To_Real_Float,

To_Integer => Float_To_Integer,

To_Float => Real_Float_To_Float,

Image => Float_Image);

package Float_Julia is new Julia_Set (CT => Float_Computation,

Escape_Threshold => 100.0);

package Float_Julia_Fractal is new Fractal (CT => Float_Computation,

Calculate_Pixel => Float_Julia.Calculate_Pixel,

Task_Pool_Size => Task_Pool_Size);We now have the Julia_Set package with both the floating point and fixed point implementations. The AWS URI router is set up to serve a floating point image if the GET request URI is “/floating_fractal” and a fixed point image is the request URI is “/fixed_fractal”.

The performance results

Interestingly, fixed point was not unequivocally faster than floating point in every situation. On my 64 bit mac, the floating point was slightly faster. On a 64 bit ARM running QNX the fixed point was faster. Another phenomenon I noticed is that the fixed point was less precise with little performance gain in most cases. When running the fixed point algorithm, you will notice what looks like dust in the image. That is integer overflow or underflow instances on the pixel. Under normal operation, these would manifest as runtime exceptions in the application, but to increase performance, I compiled with those checks turned off.

Fixed point - the tradeoff

Here’s the takeaway from this project: fixed point math performance is only better if you need lower precision OR a limited range of values. Keep in mind that this is an OR situation. You can either have a precise fixed point number with a small range, or a imprecise fixed point number with a larger range. If you try to have a precise fixed point number with a large range, the underlying integer type that is used will be quite large. If the type requires a 128 bit or larger integer type, then you lose all performance you would have gained by using fixed point in the first place.

In the case of the fractal, the fixed point is useless because we need a high precision with a large range. In this respect, we are stuck with floating point, or weird looking dusty fixed point fractals.

Conclusion

Although this was an interesting exercise to compare the two types of performances in a pure math situation, it isn’t entirely meaningful. If this was a production application, we would be optimizing the algorithms for the types being used. In this case, the algorithm was very generic and didn’t account for the limited range or precision that would be necessary for an optimized fixed point type. This is why the floating point performance was comparable in most cases to the fixed point with better visual results.

However, there are a few important design paradigms that were interesting to implement. The task pool is a useful concept for any parallelized application such as this. And the polymorphism via generics that we used to create the Real type can be extremely useful to give you greater flexibility to have multiple build or feature configurations.

With Object Oriented programming languages like Ada and C++ it is sometimes tempting to start designing an application like this using base classes and derivation to implement new functionality. In this application that wouldn’t have made sense because we don’t need dynamic polymorphism; everything is known at compile time. So instead, we can use generics to achieve the polymorphism without creating the overhead associated with tagged types and classes.